The 3D industry has been buzzing about Nvidia’s Instant NeRF (NeRF stands for neural radiance fields) ever since the company published its first demo video.

The technology has developed rapidly since then, and there is now a free iPhone app called Luma AI that anyone can use to capture NeRFs.

I tested the app with a toy robot. Here’s an automatically generated video of the result:

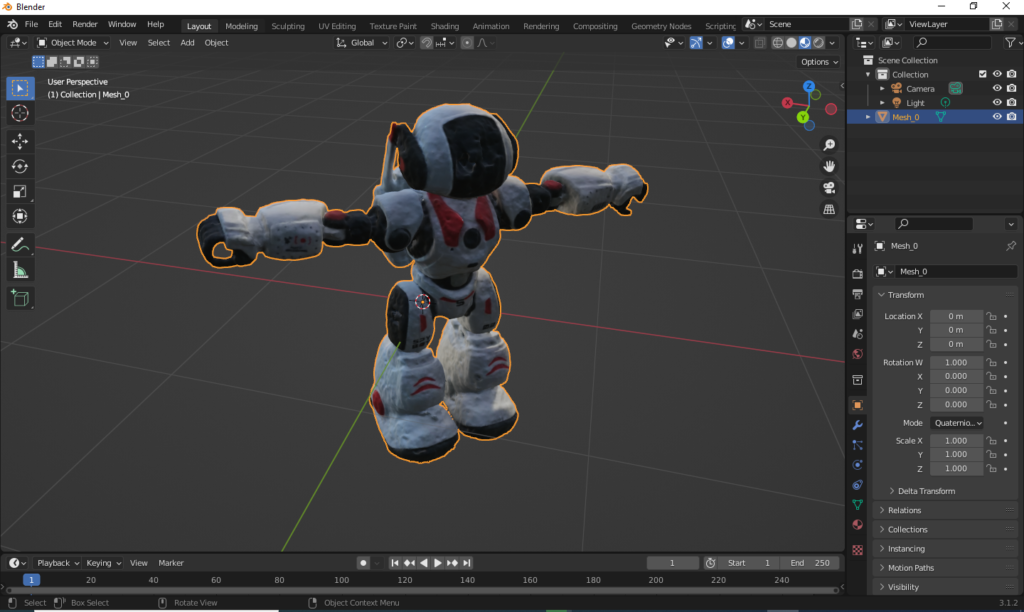

The amazing thing about NeRF renders is that they handle light reflections and bounces very realistically, which makes them a good fit for VFX work. We can also export a textured 3D model from the Luma AI app, but it’s not as impressive as rendering with NeRFs. The exported model is still quite good compared to a basic photogrammetry process, especially considering that the surface of our object was quite reflective. Here’s a screenshot from Blender:

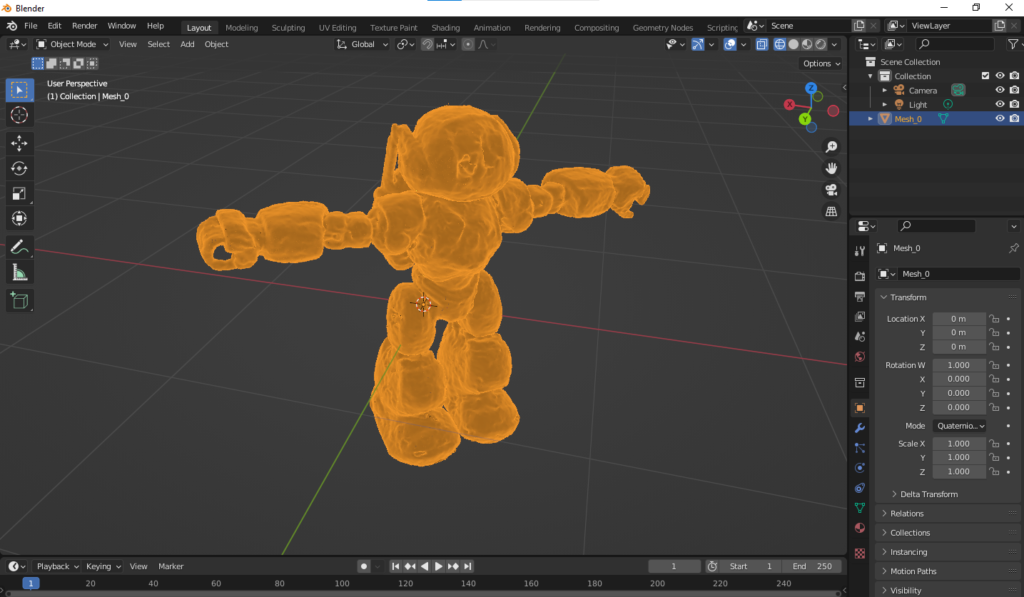

Here is what the mesh looks like (very dense):

Here’s another cool shot, this time of a Robotime Ball Parkour toy: